PROBABLY PRIVATE

Issue #11: Building Private and Personal AI Systems, Exploring ML Memorization

Hello privateers,

I hope you are staying warm and safe -- it's the first snow here in Berlin and we also have no government....

In somewhat related news, I've written a post about the types of opportunities I'm interested in. Do you know an organization, company or team working on similar topics or interests? Feel free to send them my reverse job posting, as I'm hoping to reach beyond my direct network. :)

At PyData Paris this year, I was invited to keynote and decided to present on building privacy-first personal AI systems. As you all know, this is something near and dear to my work that I reference often in my book, but that I haven't yet truly seen come to market.

I was inspired by mumblings within Apple Intelligence that seemed to mirror some of the concepts, and I thought it was worth exploring the parallels I see between early computing systems and today's AI. I am a child of the 80s and vividly remember when my family got their first shared PC, but not everyone lived through this evolution.

Early computers were huge, expensive and centralized. They were run by small, specialized teams of experts. They were usually funded by large corporations, governments or both. Even when the first PCs came out, hardly anyone bought them. Why would you buy a thing that you could only use if you knew how to program, or that could run only specific, often specialized, software?

That changed as software became more relevant and computers became cheaper and smaller. VisiCalc, an early spreadsheet program, was a turning point for the Apple II because it showed that computers could solve normal problems. Many people still love and use Excel and spreadsheets daily, showing that software initially designed in the late 1970s continues to be applicable to real-world problems today.

I asked myself (and the PyData attendees): have we reached the VisiCalc moment in AI yet? Although some folks in the audience believed so, I am not yet convinced. I think most AI we have is still hyper-centralized, generally quite specialized and doesn't have the versatility to make it viable in 5 years.

You can argue that ChatGPT is the VisiCalc, but we have yet to see massive general adoption (paid users are somewhere around 1 million a month). There are many more free users, but the queries are limited enough that I'm not sure I'd consider that "general adoption". I think more than anything it shows curiosity, which is great, but it's not the same as the VisiCalc reaction of "I must buy this and use it right away".

You could also argue that Copilot (or similar tools) is now regularly used for programming, but that's more hobbyist than generalist... And it's still a fairly small paid user group.

What do you think? Are there AI tools you've seen used regularly by a wide swath of people that you believe are the VisiCalc? Do you think it's important that these tools also be available for regular data, not just prompts?

One reason I'm excited about moving towards personalized AI is that it greatly expands the types of models and tasks you can support. Instead of building for all users equally and attempting to fit general purpose at scale, you can create specific models and narrow down to specific use cases.

If you had a magic wand, what personal AI assistant would you dream up? What rubber duck do you wish for? Or a research assistant? Or what thing do you despise that you wish was actually automated or assisted? How would your current use of AI change if you could steer it yourself, use it with your sensitive data or make your own decisions about what was "good" or "bad" performance?

I'm curious whether something comes next that is generally usable and that also lets folks use their own data to better leverage AI for personal use. Maybe it's whatever Apple Intelligence will release next year, maybe it's just the integration of better search or voice assistants in our phones so everyone who wants to use them can do so in an ergonomic and trustworthy way. Or maybe there are even more interesting ideas that haven't yet been developed or explored, but are coming...

To inspire the types of ideas I think we need, I built a local-first, offline RAG using UKPLab's Sentence Encoders for semantic search and llamafiles for the generation part. I open sourced it on GitHub, where I'll also add some additional functionality and other small proofs of concept for the types of personal and private AI I think are interesting. If you have an idea you want me to look at or share, please let me know!
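If you haven't seen the retrieve-then-generate pattern before, here is a tiny sketch of the idea that runs with only the Python standard library. It is not the code from my repository: the bag-of-words `embed` function is a toy stand-in for a real sentence encoder, the function names and example notes are made up for illustration, and the generation step is left out entirely (the sketch stops at building the prompt you would hand to a local model).

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy stand-in for a sentence encoder: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, top_k=2):
    """Return the top_k documents most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(
        documents,
        key=lambda doc: cosine(query_vec, embed(doc)),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(query, documents, top_k=2):
    """Assemble the prompt a local model would receive (generation not shown)."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return f"Answer using only these notes:\n{context}\n\nQuestion: {query}"

# Hypothetical personal notes, invented for this example.
NOTES = [
    "The dentist appointment is on Tuesday at 10am.",
    "Berlin had its first snow of the season this week.",
    "The spreadsheet with the household budget lives on the home server.",
]

if __name__ == "__main__":
    print(build_prompt("When is the dentist appointment?", NOTES, top_k=1))
```

Everything here stays on your machine, which is the point: your notes are searched locally and only the few relevant ones end up in the prompt. A real setup would swap `embed` for proper sentence embeddings and pass the prompt to a locally running model.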

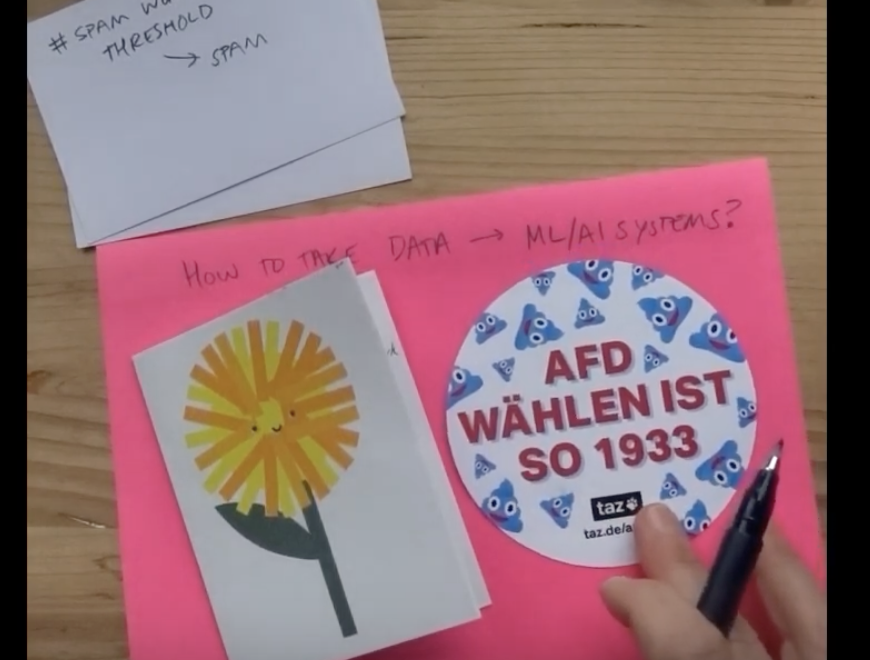

The first two articles and accompanying videos (see screenshot above) are out in my series on exploring the phenomenon of memorization in deep learning.

The first article looks at data collection (and briefly at labeling). A few tidbits for a tl;dr:

The second article looks at encoding data for ML/AI tasks, in summary:

I'll be adding more articles every week, so stay tuned!

I'm curious what questions, ideas and feedback you have on the articles thus far. Is this an interesting topic in your work? What do you think people should learn or understand to evaluate ML systems?

This issue is a bit shorter, as much of my thinking is going into the job search and building out compelling visual storytelling for the memorization series, but I hope you enjoyed it!

I'm always curious to hear your ideas, thoughts, questions and feedback. If you had answers to any of the questions in this issue and you're willing to share, hit reply. :)

With Love and Privacy, kjam
